Understanding Hadoop Architecture: A Comprehensive Guide

Hadoop is a framework for storing and processing large datasets across clusters of computers. Its architecture is central to its ability to handle massive volumes of data efficiently. This article walks through the key components of Hadoop and explains how they work together.

Core Components of Hadoop Architecture

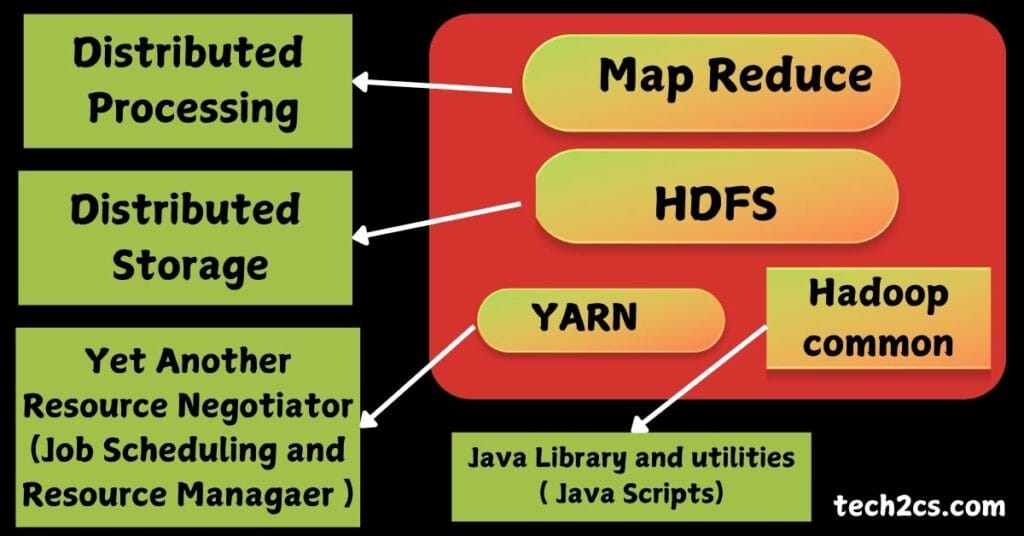

Hadoop architecture consists of four primary components:

- Hadoop Common: This is the foundational layer that provides the essential libraries and utilities required by other Hadoop modules. It includes file system libraries and other necessary files that support the operation of the entire Hadoop ecosystem.

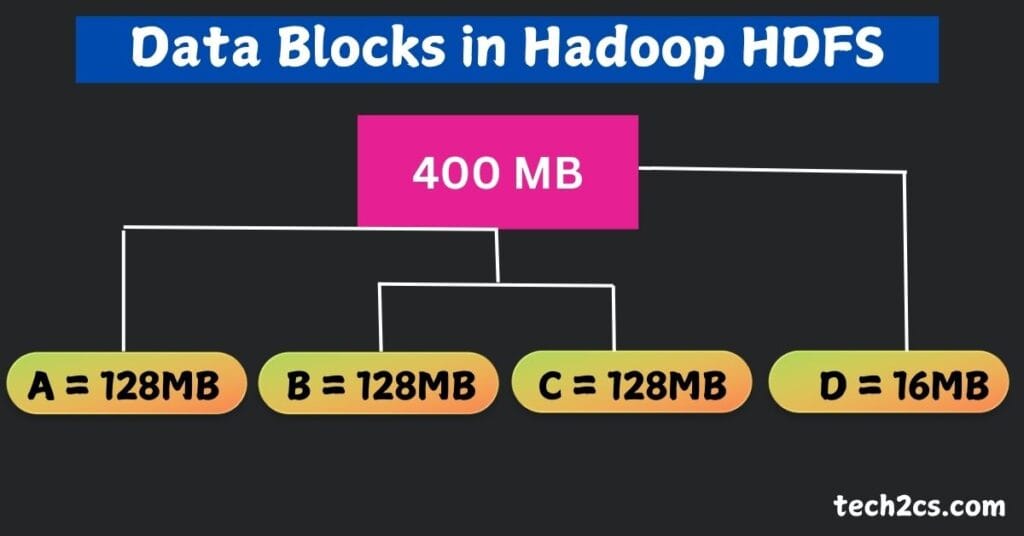

- Hadoop Distributed File System (HDFS): HDFS is the storage component of Hadoop. It is designed to store very large files reliably and to stream that data to user applications at high bandwidth. HDFS breaks large files into blocks (128 MB by default in Hadoop 2.x and later, often configured to 256 MB) and distributes them across the nodes of a cluster, replicating each block to provide redundancy and fault tolerance.

- Hadoop YARN (Yet Another Resource Negotiator): YARN is the resource management layer of Hadoop. It manages and schedules resources across the cluster, allowing multiple applications to share it without interfering with each other. YARN separates cluster-wide resource management (the ResourceManager) from per-application scheduling and monitoring (a per-application ApplicationMaster), which improves scalability and resource utilization.

- Hadoop MapReduce: This is the processing engine of Hadoop. MapReduce is a programming model that enables the processing of large data sets in a distributed manner. It consists of two primary functions: the Map function, which processes input data and produces key-value pairs, and the Reduce function, which receives the Map output grouped by key and aggregates it to produce the final result.
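To make the block-splitting arithmetic described for HDFS concrete, here is a minimal Python sketch. The helper split_into_blocks is hypothetical (HDFS splits files internally as they are written), it assumes the 128 MB default block size, and it ignores replication.

```python
from __future__ import annotations

MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB  # assumed default block size (Hadoop 2.x and later)


def split_into_blocks(file_size: int, block_size: int = DEFAULT_BLOCK_SIZE) -> list[int]:
    """Return the size in bytes of each block a file of `file_size` bytes would occupy.

    Hypothetical helper for illustration only; HDFS does this itself during writes.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block holds only the leftover bytes.
        sizes.append(remainder)
    return sizes


blocks = split_into_blocks(300 * MB)
print(len(blocks), [size // MB for size in blocks])  # 3 [128, 128, 44]
```

A 300 MB file therefore becomes two full blocks plus one 44 MB block. The final block stores only its actual bytes, so a small file does not consume a full block's worth of disk space.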

Hadoop Cluster Architecture

A Hadoop cluster typically consists of two types of nodes:

- Master Node: This node manages the cluster and coordinates the tasks. It runs several critical components:

  - NameNode: This is the master server responsible for managing the HDFS namespace and controlling access to files by clients. It keeps track of the metadata of the files stored in HDFS, such as file names, permissions, and the locations of data blocks.

  - ResourceManager: This is part of YARN and manages the allocation of resources for applications. It tracks the cluster’s resource capacity and schedules applications onto it.

- Worker Nodes: These nodes store the actual data and perform the computations. On each worker, the DataNode daemon serves read and write requests from clients and reports to the NameNode on the data blocks it stores, while the NodeManager daemon (part of YARN) runs the containers in which tasks assigned through the ResourceManager, such as MapReduce map and reduce tasks, execute.
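The division of labor between the NameNode and the DataNodes can be sketched as a toy in-memory model. NameNodeMetadata and its methods are hypothetical names, not Hadoop's API. The point is that the NameNode answers "where are the blocks?", while clients then read the block contents directly from the DataNodes. In HDFS the block-to-DataNode mapping is rebuilt from DataNode block reports rather than persisted, which is what record_replica stands in for here.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NameNodeMetadata:
    """Toy model of NameNode metadata (hypothetical class, not Hadoop's API)."""

    # path -> ordered list of block IDs making up the file
    files: dict[str, list[str]] = field(default_factory=dict)
    # block ID -> names of DataNodes that reported holding a replica
    block_locations: dict[str, set[str]] = field(default_factory=dict)

    def add_file(self, path: str, block_ids: list[str]) -> None:
        self.files[path] = list(block_ids)

    def record_replica(self, block_id: str, datanode: str) -> None:
        # DataNodes report the blocks they store; the NameNode remembers where each replica lives.
        self.block_locations.setdefault(block_id, set()).add(datanode)

    def locate(self, path: str) -> list[tuple[str, list[str]]]:
        """Answer a client's read request: block IDs in file order, with the DataNodes holding each."""
        return [(b, sorted(self.block_locations.get(b, set()))) for b in self.files[path]]


nn = NameNodeMetadata()
nn.add_file("/logs/app.log", ["blk_1", "blk_2"])
for block, node in [("blk_1", "dn1"), ("blk_1", "dn2"), ("blk_2", "dn3")]:
    nn.record_replica(block, node)
print(nn.locate("/logs/app.log"))  # [('blk_1', ['dn1', 'dn2']), ('blk_2', ['dn3'])]
```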

Data Flow in Hadoop

The data flow in a Hadoop architecture can be summarized as follows:

- Data Ingestion: Data is ingested into HDFS from various sources, such as databases, log files, and real-time streaming data.

- Data Storage: The ingested data is split into blocks and distributed across the DataNodes. HDFS replicates these blocks (default replication factor is three) to ensure data durability and availability.

- Data Processing: When a MapReduce job runs, the ResourceManager allocates containers for it, and map tasks are dispatched to worker nodes, preferably the nodes that already store the relevant data blocks (data locality). Each map task processes its assigned input and writes its intermediate results to the local disk of its node, where the reduce tasks later fetch them.

- Data Output: The final results are aggregated by the Reduce function and written back to HDFS or another storage system for further analysis or reporting.
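The data locality preference in the processing step can be illustrated with a greedy assignment sketch. assign_map_tasks is a hypothetical function, and it simplifies heavily: one task per free node, no rack-local tier, and no waiting for a busy local node. It is not how the YARN scheduler or the MapReduce ApplicationMaster is actually implemented.

```python
from __future__ import annotations


def assign_map_tasks(
    block_locations: dict[str, list[str]], free_nodes: list[str]
) -> dict[str, tuple[str, str]]:
    """Greedily give each block's map task to a free node, preferring one that stores the block.

    Simplifications (assumptions): each free node takes at most one task, rack locality
    is ignored, and blocks left over when nodes run out simply wait for a later round.
    """
    available = list(free_nodes)
    plan: dict[str, tuple[str, str]] = {}
    for block, replicas in block_locations.items():
        if not available:
            break  # remaining blocks wait until a container frees up
        local = next((n for n in replicas if n in available), None)
        node = local if local is not None else available[0]
        available.remove(node)
        plan[block] = (node, "node-local" if local is not None else "remote")
    return plan


blocks = {"blk_1": ["dn1", "dn2"], "blk_2": ["dn2", "dn3"], "blk_3": ["dn1", "dn3"]}
print(assign_map_tasks(blocks, ["dn1", "dn2", "dn4"]))
# {'blk_1': ('dn1', 'node-local'), 'blk_2': ('dn2', 'node-local'), 'blk_3': ('dn4', 'remote')}
```

Only blk_3 has to be read over the network, because neither dn1 nor dn3 (its replica holders) is still free when its turn comes.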

1. MapReduce

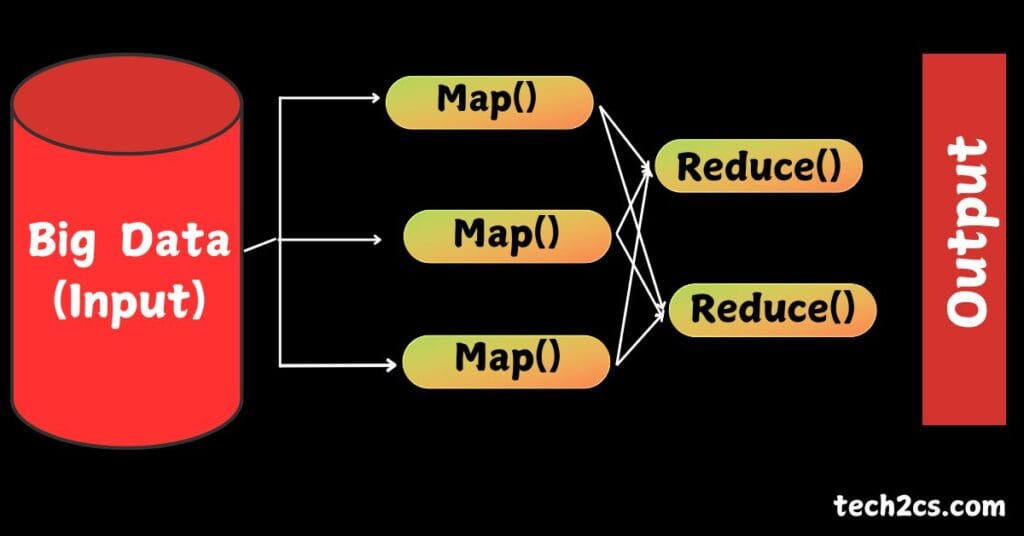

In the Hadoop ecosystem, the MapReduce programming model is essential for processing large data sets. This model is divided into two primary tasks: the Map task and the Reduce task. Below, we explore each task in detail, including their functions, processes, and roles within the Hadoop architecture.

Map Task

The Map task is the first stage of the MapReduce process. Its primary role is to process input data and produce intermediate key-value pairs. Here’s a closer look at how it operates:

- Input Splitting: Before the Map tasks begin, Hadoop divides the input data into logical pieces called input splits. By default, a split corresponds to one HDFS block (128 MB unless configured otherwise, for example to 256 MB). Each split is processed independently by a separate Map task.

- Processing the Input Data: Each Map task reads the data from its assigned split. The input format determines how the data is read and structured. The data is processed line by line or record by record, and the Map function is applied to each input record.

- Generating Key-Value Pairs: The core function of the Map task is to transform the input data into a set of intermediate key-value pairs. This transformation is determined by the user-defined Map function. For example, in a word count application, the input is lines of text, and the Map function emits one key-value pair per word, where the key is the word and the value is 1.

- Outputting Intermediate Data: Once the Map function processes the data, the intermediate key-value pairs are written to the local disk of the worker node running the Map task, not to HDFS. There they are partitioned by key and sorted, ready to be shuffled to the Reduce tasks.
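The partition-and-sort step on the map side can be sketched as follows: each map task splits its output into one partition per reducer and sorts each partition by key. The partition function follows the same formula as Hadoop's default HashPartitioner, but zlib.crc32 stands in for the Java hashCode() that Hadoop actually uses, so the partition numbers here will not match a real cluster. The function names are hypothetical.

```python
from __future__ import annotations

import zlib
from collections import defaultdict


def partition(key: str, num_reducers: int) -> int:
    # Same shape as Hadoop's default HashPartitioner: (hash & Integer.MAX_VALUE) % numReduceTasks.
    # zlib.crc32 is a stand-in hash; Hadoop uses the key's own hashCode(), so real numbers differ.
    return (zlib.crc32(key.encode("utf-8")) & 0x7FFFFFFF) % num_reducers


def partition_and_sort(
    map_output: list[tuple[str, int]], num_reducers: int
) -> dict[int, list[tuple[str, int]]]:
    """Split one map task's output into per-reducer partitions, each sorted by key."""
    partitions: dict[int, list[tuple[str, int]]] = defaultdict(list)
    for key, value in map_output:
        partitions[partition(key, num_reducers)].append((key, value))
    # Sort within each partition by key only, before the data is written to local disk.
    return {p: sorted(pairs, key=lambda kv: kv[0]) for p, pairs in sorted(partitions.items())}


pairs = [("Hello", 1), ("world", 1), ("Hello", 1), ("Hadoop", 1)]
for reducer, part in partition_and_sort(pairs, num_reducers=2).items():
    print(reducer, part)
```

Whatever the hash function, every occurrence of a key lands in the same partition, so exactly one reducer sees all of that key's values.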

Example of a Map Task

In a word count example, if the input data contains the following lines:

Hello world

Hello Hadoop

The Map task would produce the following key-value pairs:

(Hello, 1)

(world, 1)

(Hello, 1)

(Hadoop, 1)
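The word count Map function above fits in a few lines of Python. This is a sketch, not Hadoop's Java Mapper API: with the default TextInputFormat, a Java mapper also receives each line's byte offset as its input key, which this version leaves out. The name word_count_map is hypothetical.

```python
from __future__ import annotations

from collections.abc import Iterator


def word_count_map(line: str) -> Iterator[tuple[str, int]]:
    """Word count Map function: emit (word, 1) for every whitespace-separated word in a line."""
    for word in line.split():
        yield (word, 1)


lines = ["Hello world", "Hello Hadoop"]
pairs = [pair for line in lines for pair in word_count_map(line)]
print(pairs)  # [('Hello', 1), ('world', 1), ('Hello', 1), ('Hadoop', 1)]
```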


Reduce Task

The Reduce task is the second stage of the MapReduce process. Its primary role is to aggregate and summarize the intermediate key-value pairs produced by the Map task. Here’s how the Reduce task operates:

- Shuffling and Sorting: After the Map tasks complete, Hadoop shuffles and sorts the intermediate key-value pairs. This process groups all values associated with the same key together and prepares them for the Reduce task.

- Input to the Reduce Function: Each Reduce task receives one partition of the intermediate data, which may contain many keys. The Reduce function is then called once per unique key, with the list of all values for that key.

- Aggregating Results: The Reduce function processes the list of values for each key. It can perform various operations, such as summing the values, finding averages, or concatenating strings. The result of this processing is typically a smaller set of key-value pairs.

- Writing Final Output: Finally, the Reduce task writes the output key-value pairs to HDFS or another storage system, making them available for further analysis or reporting.
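The shuffle-and-sort step can be imitated in a single process by merging all map outputs, sorting by key, and grouping adjacent equal keys. shuffle_and_sort is a hypothetical name; in a real cluster each reducer fetches its own partition from every map task over the network and merge-sorts the pieces.

```python
from __future__ import annotations

from itertools import groupby
from operator import itemgetter


def shuffle_and_sort(map_outputs: list[list[tuple[str, int]]]) -> list[tuple[str, list[int]]]:
    """Merge the output of all map tasks, sort it by key, and group the values for each key."""
    merged = [pair for output in map_outputs for pair in output]
    merged.sort(key=itemgetter(0))  # stable sort: values keep their arrival order within a key
    return [(key, [value for _, value in group]) for key, group in groupby(merged, key=itemgetter(0))]


# One list per map task, e.g. one task per input line of the word count example.
map_outputs = [[("Hello", 1), ("world", 1)], [("Hello", 1), ("Hadoop", 1)]]
print(shuffle_and_sort(map_outputs))
# [('Hadoop', [1]), ('Hello', [1, 1]), ('world', [1])]
```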

Example of a Reduce Task

Continuing with the previous word count example, after the shuffle and sort the intermediate pairs produced by the Map task arrive at the Reduce task grouped by key:

(Hello, [1, 1])

(world, [1])

(Hadoop, [1])

The Reduce task would then process these to produce:

(Hello, 2)

(world, 1)

(Hadoop, 1)
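The word count Reduce function itself only has to add up the values it receives for a key. word_count_reduce is a hypothetical Python stand-in for a Java Reducer.

```python
from __future__ import annotations


def word_count_reduce(key: str, values: list[int]) -> tuple[str, int]:
    """Word count Reduce function: add up the 1s emitted for one word."""
    return (key, sum(values))


grouped = [("Hello", [1, 1]), ("world", [1]), ("Hadoop", [1])]
result = [word_count_reduce(key, values) for key, values in grouped]
print(result)  # [('Hello', 2), ('world', 1), ('Hadoop', 1)]
```

Because addition is associative and commutative, the same logic can also serve as a combiner that pre-aggregates map output before the shuffle, which is how Hadoop's own WordCount example uses its IntSumReducer class.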

2. HDFS

HDFS Architecture

HDFS has a master/slave architecture consisting of two primary types of nodes:

- NameNode (Master Node): The NameNode is the central component of HDFS responsible for managing the file system namespace and controlling access to files by clients. It stores metadata about the files, including:

  - The file names and directory structure.

  - The block locations for each file.

  - Permissions and access controls.

- DataNodes (Slave Nodes): DataNodes are the worker nodes in the HDFS architecture that store the actual data blocks. Each DataNode is responsible for:

  - Serving read and write requests from clients.

  - Performing data replication as instructed by the NameNode.

  - Reporting back to the NameNode with the status of the data blocks it stores.
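The DataNodes' reporting duty is what lets the NameNode notice failures. DataNodes send periodic heartbeats (every 3 seconds by default) along with block reports; when a DataNode stops heartbeating, the NameNode treats it as dead and schedules new copies of the blocks it held. The sketch below shows only the detection logic. The function name and the timeout value are illustrative assumptions, not Hadoop's actual dead-node threshold.

```python
from __future__ import annotations


def under_replicated_blocks(
    block_locations: dict[str, set[str]],
    last_heartbeat: dict[str, float],
    now: float,
    timeout: float,
    target_replication: int = 3,
) -> dict[str, int]:
    """Return block ID -> number of missing replicas, counting only DataNodes still heartbeating.

    `timeout` is an illustrative parameter, not Hadoop's actual dead-node threshold.
    """
    live = {dn for dn, seen in last_heartbeat.items() if now - seen <= timeout}
    missing: dict[str, int] = {}
    for block, holders in block_locations.items():
        live_replicas = len(holders & live)
        if live_replicas < target_replication:
            missing[block] = target_replication - live_replicas
    return missing


locations = {"blk_1": {"dn1", "dn2", "dn3"}, "blk_2": {"dn2", "dn3", "dn4"}}
heartbeats = {"dn1": 0.0, "dn2": 95.0, "dn3": 98.0, "dn4": 99.0}  # seconds
print(under_replicated_blocks(locations, heartbeats, now=100.0, timeout=30.0))  # {'blk_1': 1}
```

Here dn1 has been silent for 100 seconds, so blk_1 is one replica short and would be copied to another DataNode, while blk_2 still has all three live replicas.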

Data Storage and Replication

When a file is uploaded to HDFS, it undergoes the following processes:

- File Splitting: HDFS divides the file into blocks (128 MB by default, often configured to 256 MB) for easier management and distribution.

- Block Replication: Each block is replicated across multiple DataNodes (the default replication factor is three) to ensure fault tolerance. This means that even if a DataNode fails, the data can still be accessed from another DataNode that has a copy of the block.

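- Data Placement Policy: HDFS places replicas with rack awareness. With the default policy and a replication factor of three, the first replica goes on the writer’s node if the writer is itself a DataNode (otherwise on a randomly chosen DataNode), the second on a node in a different rack, and the third on a different node in that same remote rack. This protects the data against the loss of an entire rack while limiting cross-rack write traffic.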
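The default placement pattern for three replicas can be sketched as a function over a rack topology. place_replicas is hypothetical: it picks the first eligible node wherever HDFS would choose randomly, so its results are reproducible, and it assumes at least two racks, with the remote rack holding two or more DataNodes.

```python
from __future__ import annotations


def place_replicas(topology: dict[str, list[str]], writer: str | None = None) -> list[str]:
    """Pick three DataNodes for a new block following the default rack-aware pattern.

    topology maps rack name -> DataNode names. Assumes at least two racks, with a
    remote rack holding two or more nodes. Picks the first eligible node where HDFS
    would pick randomly, so the result is reproducible.
    """
    rack_of = {dn: rack for rack, nodes in topology.items() for dn in nodes}
    # Replica 1: the writer's own node if it is a DataNode, otherwise some node in the cluster.
    first = writer if writer in rack_of else next(iter(rack_of))
    # Replica 2: a node on a different rack, so losing one rack cannot lose every copy.
    remote_rack = next(r for r, nodes in topology.items() if r != rack_of[first] and len(nodes) >= 2)
    second = topology[remote_rack][0]
    # Replica 3: another node on the same remote rack, which keeps cross-rack traffic down.
    third = next(dn for dn in topology[remote_rack] if dn != second)
    return [first, second, third]


racks = {"rack1": ["dn1", "dn2"], "rack2": ["dn3", "dn4"]}
print(place_replicas(racks, writer="dn2"))  # ['dn2', 'dn3', 'dn4']
print(place_replicas(racks))                # ['dn1', 'dn3', 'dn4']
```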

HDFS Operations

HDFS supports various operations, including:

- File Creation: Clients can create new files in HDFS by writing data to them. HDFS divides the data into blocks and stores them across DataNodes.

- File Reading: Clients can read files from HDFS. The system retrieves the necessary blocks from the appropriate DataNodes, allowing for efficient data access.

- File Deletion: HDFS allows clients to delete files, which will remove the metadata from the NameNode and the associated blocks from the DataNodes.

- File Modification: HDFS is optimized for write-once, read-many access. Existing data in a file cannot be modified in place after it is written, but clients can append data to the end of existing files.
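The write-once, append-only semantics can be modeled with a small in-memory class. WriteOnceStore and its methods are hypothetical and say nothing about how HDFS stores data; they only mirror which operations are allowed.

```python
from __future__ import annotations

import io


class WriteOnceStore:
    """Toy model of HDFS file semantics: write once, read many, append at the end (hypothetical)."""

    def __init__(self) -> None:
        self._files: dict[str, bytes] = {}

    def create(self, path: str, data: bytes, overwrite: bool = False) -> None:
        if path in self._files and not overwrite:
            raise FileExistsError(path)
        # Overwriting replaces the whole file; it is not an in-place edit.
        self._files[path] = bytes(data)

    def read(self, path: str) -> bytes:
        return self._files[path]

    def append(self, path: str, data: bytes) -> None:
        # New bytes go after the existing ones; earlier bytes are never rewritten.
        self._files[path] += bytes(data)

    def write_at(self, path: str, offset: int, data: bytes) -> None:
        # Random-offset writes are exactly what the write-once model forbids.
        raise io.UnsupportedOperation("in-place modification is not supported")

    def delete(self, path: str) -> None:
        del self._files[path]


store = WriteOnceStore()
store.create("/data/events.log", b"event-1\n")
store.append("/data/events.log", b"event-2\n")
print(store.read("/data/events.log"))  # b'event-1\nevent-2\n'
```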

3. YARN Architecture

YARN’s architecture consists of several key components:

- ResourceManager: The ResourceManager is the master daemon responsible for arbitrating resources across the cluster. It has two main components:

  - Scheduler: The Scheduler allocates resources to running applications according to a pluggable scheduling policy (e.g., the FIFO, Capacity, or Fair Scheduler). It is a pure scheduler: it does not monitor or track application status, and it does not restart tasks that fail.

  - ApplicationsManager: This component accepts application submissions, negotiates the first container in which each application’s ApplicationMaster runs, and restarts the ApplicationMaster container if it fails.

- NodeManager: Each node in the YARN cluster runs a NodeManager daemon, which is responsible for managing resources on that node. The NodeManager performs several functions:

  - Monitoring resource usage (CPU, memory, disk) for the containers running on the node.

  - Reporting resource availability to the ResourceManager.

  - Managing the execution of containers (isolated environments where tasks run).

- ApplicationMaster: For each application submitted to the cluster, an ApplicationMaster is created. It is responsible for:

  - Negotiating resources with the ResourceManager’s Scheduler.

  - Monitoring the progress of tasks and handling task failures.

  - Coordinating the execution of the application’s tasks and managing their lifecycle.

- Container: A container is a logical bundle of resources (such as CPU and memory) allocated to a task on a single node. Each container runs a specific task of the application, and multiple containers can run on a single NodeManager.
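The bookkeeping behind containers can be illustrated with a toy NodeManager that accepts a container only when enough memory and virtual cores remain. Container and NodeManager here are hypothetical Python classes, not YARN's API. For brevity, the fit check (in YARN, the Scheduler's job) and the NodeManager's accounting are folded into one class.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Container:
    container_id: str
    memory_mb: int
    vcores: int


@dataclass
class NodeManager:
    """Toy model of one node's capacity bookkeeping (hypothetical, not the YARN API)."""

    name: str
    memory_mb: int
    vcores: int
    running: list[Container] = field(default_factory=list)

    def free(self) -> tuple[int, int]:
        used_mb = sum(c.memory_mb for c in self.running)
        used_cores = sum(c.vcores for c in self.running)
        return self.memory_mb - used_mb, self.vcores - used_cores

    def launch(self, container: Container) -> bool:
        free_mb, free_cores = self.free()
        if container.memory_mb > free_mb or container.vcores > free_cores:
            return False  # the container does not fit in what is left on this node
        self.running.append(container)
        return True

    def release(self, container_id: str) -> None:
        self.running = [c for c in self.running if c.container_id != container_id]


nm = NodeManager("node1", memory_mb=8192, vcores=4)
print(nm.launch(Container("c1", 2048, 1)))  # True
print(nm.launch(Container("c2", 4096, 2)))  # True
print(nm.launch(Container("c3", 4096, 1)))  # False: only 2048 MB left
nm.release("c1")
print(nm.free())  # (4096, 2)
```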

YARN Data Flow

The data flow in a YARN-based system can be summarized in the following steps:

- Application Submission: A user submits an application to the ResourceManager, which allocates a container and has the NodeManager on that node launch the application’s ApplicationMaster in it.

- Resource Allocation: The ApplicationMaster requests resources for the application from the ResourceManager. The ResourceManager allocates containers based on the application’s requirements and current resource availability.

- Task Execution: Once containers are allocated, the ApplicationMaster asks the NodeManagers on the corresponding nodes to launch the application’s tasks in those containers.

- Monitoring and Management: The ApplicationMaster monitors the execution of tasks, handles any failures, and can request additional resources if needed.

- Completion: After all tasks are completed, the ApplicationMaster unregisters from the ResourceManager, and the resources held by the application’s containers are released for future applications.
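The five steps above can be walked through with a memory-only simulation. ToyResourceManager and run_application are hypothetical. Tasks finish instantly, vcores are ignored, and the first node with room wins instead of any real scheduling policy.

```python
from __future__ import annotations


class ToyResourceManager:
    """Hypothetical, memory-only stand-in for the ResourceManager (not the YARN API)."""

    def __init__(self, node_memory_mb: dict[str, int]) -> None:
        self.free_mb = dict(node_memory_mb)  # node name -> free memory in MB

    def allocate(self, memory_mb: int) -> str | None:
        """Grant a container on the first node with enough free memory, or None if none fits."""
        for node, free in self.free_mb.items():
            if free >= memory_mb:
                self.free_mb[node] = free - memory_mb
                return node
        return None

    def release(self, node: str, memory_mb: int) -> None:
        self.free_mb[node] += memory_mb


def run_application(rm: ToyResourceManager, app: str, am_mb: int, task_mb: int, num_tasks: int) -> list[str]:
    """Walk one application through submission, allocation, execution, and completion."""
    am_node = rm.allocate(am_mb)  # submission: a container is found for the ApplicationMaster
    if am_node is None:
        return [f"{app}: waiting for an ApplicationMaster container"]
    log = [f"{app}: ApplicationMaster on {am_node}"]
    remaining = list(range(num_tasks))
    wave = 0
    while remaining:
        wave += 1
        granted = []
        while remaining:  # the AM keeps requesting containers until none fit
            node = rm.allocate(task_mb)
            if node is None:
                break
            granted.append((remaining.pop(0), node))
        if not granted:
            log.append(f"{app}: no node can fit a task container")
            break
        log.append(f"{app}: wave {wave} runs " + ", ".join(f"task {t} on {n}" for t, n in granted))
        for _, node in granted:  # tasks finish instantly here; their containers are released
            rm.release(node, task_mb)
    rm.release(am_node, am_mb)  # completion: the AM's own container is freed as well
    log.append(f"{app}: finished" if not remaining else f"{app}: failed")
    return log


rm = ToyResourceManager({"node1": 4096, "node2": 4096})
for line in run_application(rm, "app_1", am_mb=1024, task_mb=2048, num_tasks=4):
    print(line)
print(rm.free_mb)  # {'node1': 4096, 'node2': 4096}
```

With 1 GB taken by the ApplicationMaster, only three 2 GB task containers fit at once, so the fourth task runs in a second wave after the first containers are released.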

4. Hadoop Common

Hadoop Common is a foundational component of the Hadoop ecosystem, providing essential libraries and utilities required by other Hadoop modules, such as HDFS (Hadoop Distributed File System) and YARN (Yet Another Resource Negotiator). Here’s a concise overview of its key features and importance:

Key Features

- Shared Libraries: Provides libraries and utilities for other Hadoop components, promoting code reuse and reducing redundancy.

- Interoperability: Provides the shared RPC and serialization framework that lets different Hadoop modules communicate with each other.

- Utilities: Contains command-line tools and scripts, such as the hadoop command and its file system shell, for file management, configuration, and administration.

- Configuration Management: Offers XML-based configuration files (such as core-default.xml and core-site.xml) that define settings for various Hadoop components, allowing customization.

- Support for Multiple File Systems: Facilitates interaction with various storage systems, including HDFS, Amazon S3, and local file systems.
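Hadoop's XML configuration format is simple enough to read with Python's standard library, which makes the layering of defaults and site overrides easy to see. The sample mirrors a core-site.xml file; fs.defaultFS and io.file.buffer.size are real Hadoop property names, but the host name is hypothetical and load_properties is not Hadoop's Configuration class.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# A core-site.xml-style snippet; the NameNode host name is hypothetical.
CORE_SITE = """
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode.example.com:8020</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
"""


def load_properties(xml_text: str) -> dict[str, str]:
    """Read <property><name>/<value> pairs from a Hadoop-style XML configuration string."""
    root = ET.fromstring(xml_text.strip())
    return {prop.findtext("name"): prop.findtext("value") for prop in root.iter("property")}


# Defaults as shipped in core-default.xml; values from core-site.xml override them.
defaults = {"fs.defaultFS": "file:///", "io.file.buffer.size": "4096"}
conf = {**defaults, **load_properties(CORE_SITE)}
print(conf["fs.defaultFS"])         # hdfs://namenode.example.com:8020
print(conf["io.file.buffer.size"])  # 131072
```

This mirrors the order in which Hadoop loads its default resources, core-default.xml first and core-site.xml second, so site values win.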

Importance

- Foundation for Hadoop Components: Serves as the core library layer that all Hadoop modules build on.

- Simplifies Development: Provides a common set of tools, so developers can focus on building applications instead of reimplementing shared infrastructure.

- Enhances Performance: Provides efficient serialization, compression codecs, and native I/O libraries that improve the performance of big data workflows.

- Promotes Best Practices: Standardizes configuration and library structure, facilitating effective implementation and maintenance of big data solutions.

Advantages of Hadoop Architecture

- Scalability: Hadoop can easily scale horizontally by adding more nodes to the cluster, allowing it to handle increasing data volumes.

- Cost-Effective: Hadoop is designed to run on commodity hardware, making it a cost-effective solution for big data processing.

- Fault Tolerance: With its data replication feature, Hadoop ensures that data remains available even if a node fails.

- Flexibility: Hadoop can process various types of data, including structured, semi-structured, and unstructured data.

In summary, Hadoop’s architecture is a robust solution for managing and processing large datasets in a distributed environment. Its modular design, combining HDFS, YARN, and MapReduce on top of Hadoop Common, provides a framework that scales horizontally while ensuring data reliability and fault tolerance. As organizations continue to grapple with big data challenges, understanding and leveraging Hadoop’s architecture will be crucial in unlocking the value of their data.