Hadoop Ecosystem

The Hadoop ecosystem is a suite of tools and frameworks designed to process and analyze massive amounts of data efficiently. It is built on the core of Apache Hadoop, which includes HDFS (Hadoop Distributed File System) for storage, YARN for resource management, and MapReduce for distributed data processing. The ecosystem extends Hadoop’s capabilities, making it a powerful platform for big data solutions.

This article covers the Hadoop Ecosystem's key components, benefits, advantages, disadvantages, and applications.

What is the Hadoop Ecosystem?

The Hadoop Ecosystem is an open-source framework designed to process, store, and analyze vast amounts of data across distributed computing clusters. It comprises various tools and technologies like HDFS, MapReduce, YARN, Hive, Spark, Pig, and more, each serving specific roles in big data workflows.

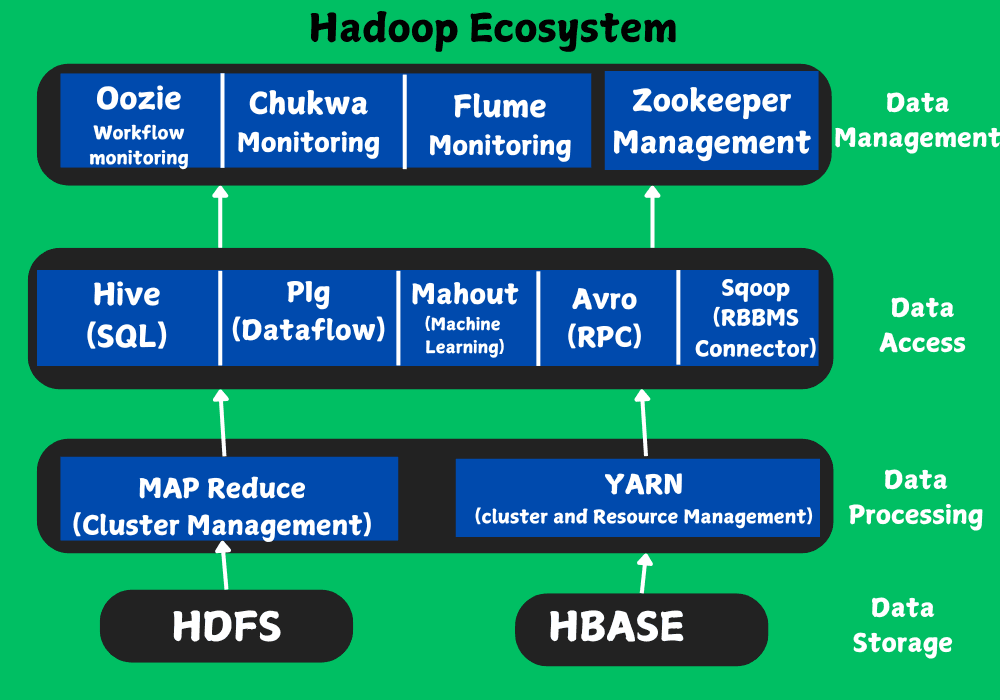

Key Components of the Hadoop Ecosystem:

- Hadoop Distributed File System (HDFS)

  - A distributed file storage system designed to store large datasets across multiple nodes.

  - Provides fault tolerance through block replication and scales by adding nodes.

- MapReduce

  - A programming model for processing large datasets in parallel across a Hadoop cluster.

  - It consists of two phases: the Map phase, which turns input records into key-value pairs (filtering or transforming them along the way), and the Reduce phase, which aggregates the values for each key. Between the two, the framework shuffles and sorts the pairs by key.

- YARN (Yet Another Resource Negotiator)

  - Manages resources in a Hadoop cluster and schedules tasks.

  - Allows multiple applications to run simultaneously on Hadoop.

- Hive

  - A data warehouse infrastructure that uses SQL-like queries (HiveQL) to process data stored in HDFS.

  - Lets analysts who know SQL work with big data without writing MapReduce code.

- Pig

  - A scripting platform that simplifies writing MapReduce programs.

  - Uses a high-level language called Pig Latin for data transformation and analysis.

- HBase

  - A distributed, NoSQL database that runs on top of HDFS.

  - Suitable for real-time, random read/write access to large datasets.

- Sqoop

  - A tool for transferring data between Hadoop and relational databases.

  - Supports both import into Hadoop and export back to the database.

- Flume

  - A data ingestion tool for collecting, aggregating, and moving large volumes of log data into Hadoop.

- ZooKeeper

  - A centralized service for maintaining configuration information, naming, and providing distributed synchronization.

- Oozie

  - A workflow scheduler for managing Hadoop jobs.

  - Runs workflows defined as directed acyclic graphs (DAGs) of actions, so tasks can execute in sequence or in parallel branches.

- Mahout

  - A machine learning library designed to work on top of Hadoop.

  - Provides algorithms for clustering, classification, and collaborative filtering.

- Spark

  - A fast, in-memory data processing engine that complements Hadoop.

  - Used for batch processing, near-real-time streaming, and iterative computations.

- Kafka

  - A distributed event streaming platform commonly used to feed real-time data streams into Hadoop.

- HDFS Federation

  - A feature of HDFS that improves scalability by letting multiple independent NameNodes, each managing its own namespace, share the same pool of DataNodes.
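To make the MapReduce model from the list above more concrete, here is a minimal single-process sketch of a word count in plain Python. It is not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are assumptions made for illustration, and a real job would run the map and reduce steps on many nodes in parallel.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle and sort: group values by key, with keys in sorted order."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: aggregate all values that share a key."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run the three steps in order on an in-memory list of lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Hypothetical sample input standing in for lines of a file stored in HDFS.
sample_lines = ["big data big clusters", "data nodes store data"]
counts = word_count(sample_lines)
print(counts)
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` depends only on its own key, both steps can be spread across machines. That independence is what lets the model scale.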

Benefits of the Hadoop Ecosystem

- Scalability:

  - Easily scales to accommodate increasing data sizes by adding nodes to the cluster.

  - Handles petabyte-scale data without requiring high-end hardware.

- Cost-Effectiveness:

  - Operates on commodity hardware, reducing infrastructure costs.

  - Open-source nature eliminates licensing fees.

- Fault Tolerance:

  - Data blocks are replicated across multiple nodes (three copies by default in HDFS), so the failure of a single node does not cause data loss.

  - Failed tasks are rescheduled automatically on other nodes, and lost block replicas are re-created from the surviving copies.

- Flexibility:

  - Can process structured, semi-structured, and unstructured data (e.g., logs, images, videos).

  - Supports multiple data formats like JSON, XML, Avro, and Parquet.

- Efficiency in Data Processing:

  - Computation is scheduled on the nodes that already hold the data (data locality), which minimizes network transfer.

  - Tools like Spark keep intermediate data in memory, which speeds up iterative and interactive workloads.

- Wide Application Support:

  - Integrates with tools like Hive for data warehousing, Spark for in-memory and stream processing, and Mahout for machine learning.
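The fault tolerance benefit is easier to see with a small simulation. The sketch below splits a file into blocks, assigns each block to several nodes, and checks which blocks stay readable after some nodes fail. It uses the HDFS defaults of 128 MB blocks and a replication factor of 3. The round-robin placement, the node names, and the helper functions `place_blocks` and `readable_blocks` are simplifying assumptions; real HDFS placement is rack-aware.

```python
import math
from typing import Dict, List, Set

BLOCK_SIZE_MB = 128      # HDFS default block size in Hadoop 2 and later
REPLICATION_FACTOR = 3   # HDFS default replication factor


def place_blocks(file_size_mb: int, nodes: List[str]) -> Dict[int, List[str]]:
    """Split a file into blocks and assign each block to distinct nodes.

    Round-robin placement is an illustrative simplification.
    """
    if len(nodes) < REPLICATION_FACTOR:
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / BLOCK_SIZE_MB)
    placement: Dict[int, List[str]] = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            nodes[(block_id + replica) % len(nodes)]
            for replica in range(REPLICATION_FACTOR)
        ]
    return placement


def readable_blocks(placement: Dict[int, List[str]], failed: Set[str]) -> List[int]:
    """Return the blocks that still have at least one replica on a live node."""
    return [
        block_id
        for block_id, holders in placement.items()
        if any(node not in failed for node in holders)
    ]


# Hypothetical four-node cluster storing one 500 MB file.
cluster = ["node1", "node2", "node3", "node4"]
placement = place_blocks(file_size_mb=500, nodes=cluster)

print(len(placement))                                   # number of blocks
print(readable_blocks(placement, failed={"node2"}))     # one node down
print(readable_blocks(placement, failed={"node1", "node2", "node3"}))
```

In this model, losing any one or two nodes leaves every block readable, because each block has three copies on distinct nodes. Data becomes unavailable only when all three holders of a block fail together. The trade-off is storage: three replicas mean roughly three times the raw disk space of the logical data size.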

Advantages of the Hadoop Ecosystem

1. Distributed Computing

- Hadoop processes data in parallel across multiple nodes, speeding up computations.

- It splits data into smaller chunks and processes them simultaneously, reducing bottlenecks.

2. High Fault Tolerance

- If a node fails, the system continues working with minimal disruption.

- Data replication ensures availability and reliability.

3. Open-Source Community

- Supported by a large and active community.

- Frequent updates and new tools enhance its functionality.

4. Versatility

- Supports batch processing (MapReduce), stream processing (Kafka with Spark), and SQL-style or scripted analysis (Hive and Pig).

- Tools like HBase provide NoSQL database capabilities, expanding its use cases.

5. Broad Use Cases

- Used in industries like finance, healthcare, retail, and telecommunications for analytics, fraud detection, recommendation systems, and more.

Disadvantages of the Hadoop Ecosystem

1. Complexity

- Requires specialized skills in Java, Hadoop, and associated tools.

- Setting up and managing clusters can be technically challenging.

2. Security Concerns

- By default, Hadoop runs without strong authentication, and encryption of data at rest and in transit must be enabled explicitly.

- Strong authentication requires additional configuration, typically Kerberos.

3. High Latency in Batch Processing

- MapReduce writes intermediate results to disk between the map and reduce phases, so jobs have high latency.

- This makes MapReduce unsuitable for applications needing low-latency responses.

4. Resource Intensive

- Demands significant storage and compute resources, especially for large-scale implementations.

- Requires careful optimization to avoid performance bottlenecks.

5. Dependency on Commodity Hardware

- Although cost-effective, commodity hardware may fail more often, necessitating frequent maintenance.

Applications of the Hadoop Ecosystem:

- Data Analytics: Processing and analyzing structured, semi-structured, and unstructured data.

- Machine Learning: Building predictive models using Mahout or Spark MLlib.

- Log Processing: Collecting logs in real time with Flume or Kafka and analyzing them in Hadoop.

- ETL Processes: Extracting, transforming, and loading data using Sqoop and Hive.

- Data Storage: Using HDFS for scalable and fault-tolerant data storage.

The Hadoop ecosystem provides a robust framework for solving various big data challenges, making it a cornerstone of modern data-driven enterprises.

FAQs

What is the Hadoop Ecosystem?

The Hadoop Ecosystem is a collection of tools and frameworks like HDFS, MapReduce, Hive, Spark, and more, designed for distributed storage and processing of large datasets.

What are the core components of Hadoop?

The core components are HDFS (for storage), MapReduce (for processing), and YARN (for resource management).

What are the main advantages of Hadoop?

Hadoop offers scalability, cost-effectiveness, fault tolerance, flexibility to handle various data types, and efficient distributed processing.

What are the limitations of Hadoop?

Key limitations include high latency (which makes it a poor fit for real-time processing), complexity in setup, security challenges, and inefficiency with small files.
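The small-files limitation can be illustrated with a rough count of the entries the NameNode has to keep in memory. The sketch below assumes the default 128 MiB block size and a simplified model in which each file costs one file entry plus one entry per block. The function name `namespace_objects` and the file sizes are made up for illustration, and directories and replica locations are ignored.

```python
import math
from typing import List

BLOCK_SIZE_BYTES = 128 * 1024 * 1024  # HDFS default block size (128 MiB)


def namespace_objects(file_sizes_bytes: List[int]) -> int:
    """Count the file and block entries tracked for the given files.

    Simplified model: one entry per file plus one entry per block. A file
    smaller than a block still needs a block entry of its own.
    """
    files = len(file_sizes_bytes)
    blocks = sum(math.ceil(size / BLOCK_SIZE_BYTES) for size in file_sizes_bytes)
    return files + blocks


one_gib = 1024 ** 3

# The same 1 GiB of data, stored two different ways.
one_large_file = [one_gib]
many_small_files = [one_gib // 8192] * 8192  # 8192 files of 128 KiB each

large_count = namespace_objects(one_large_file)
small_count = namespace_objects(many_small_files)

print(large_count)
print(small_count)
```

Under these assumptions, the single large file needs 9 entries (1 file and 8 blocks), while the small files need 16,384 entries for the same amount of data. This is why large numbers of small files strain the NameNode long before the disks fill up.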

Which industries use Hadoop?

Industries like finance, healthcare, retail, telecommunications, and technology use Hadoop for analytics, fraud detection, recommendation systems, and large-scale data processing.