MapReduce is a programming model and processing technique used in Hadoop for big data analytics. It distributes the processing of large datasets across clusters of computers. Its main goal is scalable, fault-tolerant data processing.

Key Components of MapReduce

- Map Function:
  - The process begins with the Map phase, where the input data is divided into smaller, manageable chunks.
  - Each chunk is processed by a map function, which transforms the input data into a set of key-value pairs.
  - The output of the map function is an intermediate set of key-value pairs.
- Shuffle and Sort:
  - After the Map phase, the intermediate key-value pairs are shuffled and sorted.
  - This step groups all values associated with the same key together, preparing them for the Reduce phase.
- Reduce Function:
  - The Reduce phase takes the grouped key-value pairs from the shuffle and sort step.
  - The reducer processes each group, applying a reduce function that typically aggregates or summarizes the data.
  - The final output is a set of key-value pairs that represent the processed results.
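The three components can be sketched as a single in-memory Python function. This is only an illustration of the model, not how Hadoop runs a job: there is no distribution or fault tolerance here, and the names `run_map_reduce`, `identity_map`, `max_reduce`, and the temperature readings are hypothetical.

```python
from collections import defaultdict


def run_map_reduce(records, map_fn, reduce_fn):
    """Run map, shuffle-and-sort, and reduce inside one process."""
    # Map: every input record yields zero or more (key, value) pairs.
    intermediate = []
    for record in records:
        intermediate.extend(map_fn(record))

    # Shuffle and sort: gather the values of each key into one list.
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)

    # Reduce: visit the keys in sorted order and aggregate each group.
    output = []
    for key in sorted(groups):
        output.extend(reduce_fn(key, groups[key]))
    return output


def identity_map(record):
    # Hypothetical input record: a (city, temperature) tuple.
    city, temperature = record
    yield city, temperature


def max_reduce(city, temperatures):
    # Summarize each group by keeping its highest value.
    yield city, max(temperatures)


readings = [("Oslo", 3), ("Cairo", 31), ("Oslo", 7), ("Cairo", 28)]
result = run_map_reduce(readings, identity_map, max_reduce)
print(result)  # [('Cairo', 31), ('Oslo', 7)]
```

Passing the map and reduce functions as arguments mirrors the division of labor in the model: the framework owns the grouping step, and the user supplies only the two functions.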

MapReduce in Hadoop – Detailed Explanation with Example

MapReduce is a powerful programming model used for processing large datasets in a distributed computing environment like Hadoop. It consists of two main tasks: the Map task and the Reduce task, which work together to transform input data into meaningful output.

How MapReduce Works

- Input Data:
  - Data is stored in the Hadoop Distributed File System (HDFS). The input data can be in various formats, such as text files, CSV, or JSON.
- Map Phase:
  - In this phase, the input data is divided into input splits. By default, a split corresponds to one HDFS block (the default block size is 128 MB).
  - Each split is processed by its own map task, which applies the Map function to every record in the split.
  - The Map function reads each record, processes it, and emits intermediate key-value pairs.
- Shuffle and Sort Phase:
  - After the map phase, Hadoop shuffles the data, routing each intermediate pair to a reducer based on the key produced by the Map function.
  - This phase groups all the values associated with the same key together and sorts the groups by key.
- Reduce Phase:
  - The Reduce function takes the grouped key-value pairs as input and processes them to produce a smaller set of output data.
  - The Reducer aggregates the values associated with each key and emits a final key-value pair.
- Output Data:
  - The output from the Reduce phase is written back to HDFS, where it can be used for further analysis or reporting.
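To get a feel for how the Map phase scales with input size, the number of map tasks can be roughly estimated from the file size and the 128 MB default. The function below is a back-of-the-envelope sketch with a hypothetical name, `estimated_map_tasks`. Hadoop's actual split calculation depends on the input format and job configuration, so the real count can differ.

```python
import math

# Default HDFS block size mentioned above: 128 MB.
BLOCK_SIZE_BYTES = 128 * 1024 * 1024


def estimated_map_tasks(file_size_bytes, split_size_bytes=BLOCK_SIZE_BYTES):
    """Estimate map tasks as one task per full or partial split."""
    if file_size_bytes <= 0:
        # Assumption for this sketch: only non-empty files are considered.
        raise ValueError("file_size_bytes must be positive")
    return math.ceil(file_size_bytes / split_size_bytes)


one_gib = 1024 ** 3
print(estimated_map_tasks(one_gib))            # 8
print(estimated_map_tasks(300 * 1024 * 1024))  # 3
```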

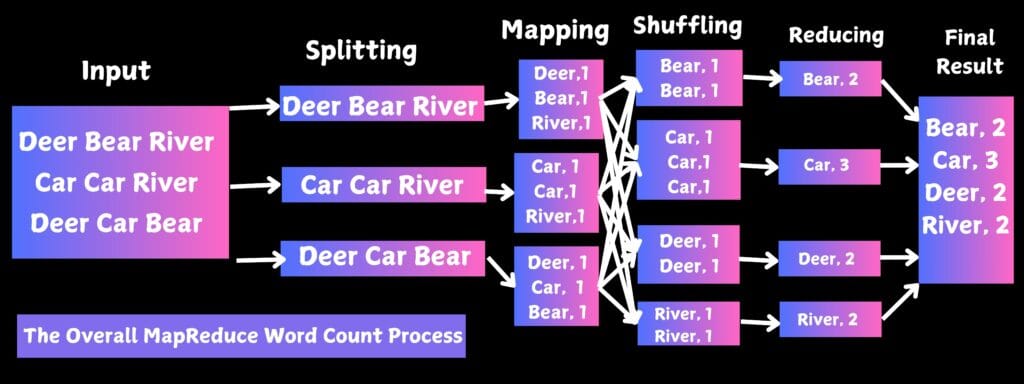

Example: Word Count

One of the simplest and most commonly used examples of MapReduce is the Word Count program. This program counts the number of occurrences of each word in a large text file.

Step 1: Input Data

Consider the following text as input data stored in a file named input.txt:

    Hello Hadoop
    Hello MapReduce
    Hadoop is great
    MapReduce is powerful

Step 2: Map Function

The Map function processes the input data. For the Word Count example, the Map function emits key-value pairs, where the key is a word and the value is the number 1.

Map Function Implementation (Pseudo Code):

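    def map_function(line):
        # Emit one (word, 1) pair per whitespace-separated word in the line;
        # yield stands in for the framework's emit call.
        for word in line.split():
            yield word, 1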

Intermediate Output from Map Phase:

| Key | Value |
|---|---|
| Hello | 1 |
| Hadoop | 1 |
| Hello | 1 |
| MapReduce | 1 |
| Hadoop | 1 |
| is | 1 |
| great | 1 |
| MapReduce | 1 |
| is | 1 |
| powerful | 1 |
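The Map step can be tried locally with plain Python. The sketch below assumes the four input lines from Step 1 are already in a list (`input_lines` is a stand-in for reading input.txt) and uses a generator in place of Hadoop's emit call. It runs in a single process, so it shows the logic only, not the parallelism.

```python
def map_function(line):
    # Emit (word, 1) for each whitespace-separated word in the line.
    for word in line.split():
        yield word, 1


# Stand-in for the contents of input.txt from Step 1.
input_lines = [
    "Hello Hadoop",
    "Hello MapReduce",
    "Hadoop is great",
    "MapReduce is powerful",
]

# Apply the map function to every line and collect the intermediate pairs.
intermediate = [pair for line in input_lines for pair in map_function(line)]

for key, value in intermediate:
    print(key, value)
```

Running it prints ten pairs, one per word occurrence, in the order the words appear in the input.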

Step 3: Shuffle and Sort Phase

In this phase, Hadoop groups the intermediate key-value pairs by key and sorts the groups by key. Hadoop compares text keys byte by byte, so capitalized words sort before lowercase ones. The output looks like this:

| Key | Values |
|---|---|
| Hadoop | [1, 1] |
| Hello | [1, 1] |
| MapReduce | [1, 1] |
| great | [1] |
| is | [1, 1] |
| powerful | [1] |
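Grouping and sorting can be imitated in a few lines of Python. This is a single-process sketch: in Hadoop the shuffle also moves data between machines, which is not modeled here. The helper name `shuffle_and_sort` is hypothetical, and keys are sorted by their UTF-8 bytes to mirror byte-wise key comparison, which places capitalized words before lowercase ones.

```python
from collections import defaultdict


def shuffle_and_sort(pairs):
    """Group values by key, then return the groups sorted by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    # Sort on the UTF-8 bytes of each key (byte-wise comparison).
    return sorted(groups.items(), key=lambda item: item[0].encode("utf-8"))


# Intermediate pairs as produced by the Map phase of the Word Count example.
intermediate = [
    ("Hello", 1), ("Hadoop", 1), ("Hello", 1), ("MapReduce", 1),
    ("Hadoop", 1), ("is", 1), ("great", 1), ("MapReduce", 1),
    ("is", 1), ("powerful", 1),
]

grouped = shuffle_and_sort(intermediate)
for key, values in grouped:
    print(key, values)
```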

Step 4: Reduce Function

The Reduce function processes the grouped data to count the occurrences of each word.

Reduce Function Implementation (Pseudo Code):

    def reduce_function(word, counts):
        # Sum the 1s collected for this word; yield stands in for emit.
        total = sum(counts)
        yield word, total

Final Output from Reduce Phase (with a single reducer, in sorted key order):

| Key | Value |
|---|---|
| Hadoop | 2 |
| Hello | 2 |
| MapReduce | 2 |
| great | 1 |
| is | 1 |
| powerful | 1 |

Step 5: Output Data

The final output is typically stored in HDFS in a specified output directory, where each word and its count can be accessed for further analysis.
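With Hadoop's default text output, each reducer writes its results to a file such as part-r-00000 in the output directory, one record per line with the key and value separated by a tab. The sketch below imitates that layout on the local filesystem rather than HDFS. The helper `write_part_file` is hypothetical, and a temporary directory stands in for the job's output directory.

```python
import tempfile
from pathlib import Path


def write_part_file(output_dir, pairs, reducer_index=0):
    """Write (key, value) pairs as tab-separated lines in a part file."""
    path = Path(output_dir) / f"part-r-{reducer_index:05d}"
    with path.open("w", encoding="utf-8") as handle:
        for key, value in pairs:
            handle.write(f"{key}\t{value}\n")
    return path


word_counts = [
    ("Hadoop", 2), ("Hello", 2), ("MapReduce", 2),
    ("great", 1), ("is", 1), ("powerful", 1),
]

# A temporary local directory stands in for the HDFS output directory.
with tempfile.TemporaryDirectory() as output_dir:
    part_file = write_part_file(output_dir, word_counts)
    part_name = part_file.name
    contents = part_file.read_text(encoding="utf-8")

print(part_name)
print(contents)
```

A job with several reducers would produce one such file per reducer, each holding the keys assigned to that reducer.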

Advantages of MapReduce

- Scalability: MapReduce can process petabytes of data by distributing tasks across a large number of nodes.

- Fault Tolerance: If a node fails, the tasks it was running are automatically rescheduled on other nodes, so the job can continue.

- Cost-Effective: Hadoop, which utilizes MapReduce, can run on commodity hardware, making it a cost-effective solution for big data analytics.

Use Cases

- Data mining and processing.

- Log analysis.

- Indexing large datasets for search engines.

- Analyzing social media data.

MapReduce plays a crucial role in the Hadoop ecosystem, enabling organizations to analyze vast amounts of data efficiently, uncover insights, and make data-driven decisions.

Conclusion

MapReduce is a powerful model for the efficient distributed processing of large datasets. The Word Count example demonstrates how input data is processed in parallel, leading to a scalable solution for big data analytics. This model can be applied to various applications, including data analysis, ETL processes, and machine learning tasks, making it a foundational concept in the Hadoop ecosystem.


FAQs

1. What is MapReduce?

A programming model for processing large datasets in Hadoop, consisting of Map and Reduce phases.

2. How does the Map phase work?

The Map phase processes input data, emitting key-value pairs as output.

3. What happens during the Shuffle and Sort phase?

Hadoop redistributes and groups intermediate key-value pairs by key, preparing them for the Reduce phase.

4. What is the role of the Reduce phase?

The Reduce phase aggregates values for each key and produces the final output.

5. What are some use cases for MapReduce?

Common use cases include log analysis, data mining, and processing large-scale datasets for machine learning and analytics.