Concept of Hadoop

In the era of big data, efficient storage and processing of large datasets have become crucial for businesses. Hadoop, an open-source software framework, has emerged as a game-changer in managing massive amounts of data in distributed computing environments. Designed to scale from a single machine to large clusters, Hadoop’s processing layer is built on the MapReduce programming model, which enables parallel processing of datasets across multiple nodes.

A Brief History of Hadoop

Hadoop originated from the work of Doug Cutting and Mike Cafarella on the Nutch open-source web search project and was later spun out as its own Apache Software Foundation project. Named after a toy elephant belonging to Cutting’s son, Hadoop was inspired by Google’s papers on the Google File System (GFS, 2003) and MapReduce (2004). The first version of Hadoop (0.1.0) was released in April 2006. Since then, it has become a cornerstone of big data technology, adopted by companies like Yahoo, Facebook, and IBM for applications ranging from data warehousing to research.

What is Hadoop?

Hadoop is a powerful framework for storing and processing vast datasets. Written primarily in Java, with some components in C and shell scripting, it is known for its scalability, fault tolerance, and cost-effectiveness.

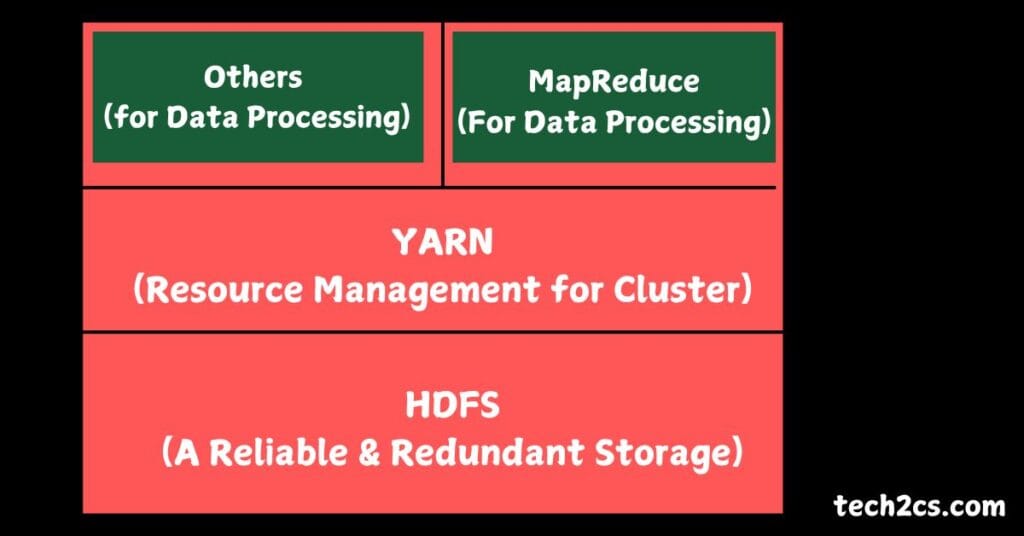

Core Components of Hadoop

a. Hadoop Distributed File System (HDFS):

HDFS is Hadoop’s storage layer and provides:

- Distributed Storage: It splits large files into smaller blocks (128 MB by default in Hadoop 2 and later; the size is configurable, and 256 MB is a common choice) and stores them across multiple nodes in the cluster.

- Data Replication: Ensures fault tolerance by replicating each data block to multiple nodes (default is 3 replicas).

- Write Once, Read Many: Optimized for high-throughput access to large files.
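To make the block and replication arithmetic concrete, here is a minimal sketch in Python. It is not part of Hadoop; the function name `hdfs_footprint` is made up for illustration, and the 128 MB block size and replication factor of 3 are simply the defaults mentioned above (in a real cluster they are set through the `dfs.blocksize` and `dfs.replication` properties).

```python
import math

BLOCK_SIZE_MB = 128  # assumed block size (dfs.blocksize in a real cluster)
REPLICATION = 3      # assumed replication factor (dfs.replication)


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Estimate block count and raw storage for one file (illustrative only)."""
    blocks = math.ceil(file_size_mb / block_size_mb)
    # The last block only occupies as much space as the data it holds,
    # so raw usage is file size times replication, not blocks times block size.
    raw_mb = file_size_mb * replication
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        "raw_storage_mb": raw_mb,
    }


print(hdfs_footprint(1000))
# {'blocks': 8, 'block_replicas': 24, 'raw_storage_mb': 3000}
```

A 1,000 MB file therefore becomes 8 blocks, stored as 24 block replicas, and takes roughly 3,000 MB of raw disk space across the cluster.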

b. MapReduce:

MapReduce is Hadoop’s processing layer and provides:

- Distributed Processing: Breaks down large data processing tasks into smaller, manageable chunks.

- Two Key Phases:

  - Map Phase: Processes input data and transforms it into intermediate key-value pairs.

  - Reduce Phase: Aggregates the results from the Map phase to generate the final output.

- Example: Count the number of occurrences of each word in a file.

  - Map Step: Splits the text into words and emits (word, 1) for each occurrence.

  - Reduce Step: Sums up all the counts for each word.
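The word count example can be sketched in plain Python to show how the two phases fit together. This is a single-process simulation, not Hadoop code: the function names are illustrative, and the `shuffle` step stands in for the grouping by key that the framework performs between the Map and Reduce phases.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(word, counts):
    """Sum the counts collected for one word."""
    return word, sum(counts)


def word_count(lines):
    """Run map, shuffle, and reduce over a list of text lines."""
    intermediate = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(w, c) for w, c in shuffle(intermediate).items())


print(word_count(["big data big cluster", "data node"]))
# {'big': 2, 'data': 2, 'cluster': 1, 'node': 1}
```

In a real cluster, each call to `map_phase` could run on a different node, and each word's counts would be routed to whichever reducer is responsible for that key.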

c. YARN (Yet Another Resource Negotiator):

YARN is Hadoop’s resource management layer and provides:

- Resource Allocation: Dynamically allocates resources (CPU, memory) to different applications.

- Job Scheduling: Manages the execution of multiple tasks in the cluster.
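The idea of resource allocation can be illustrated with a toy first-fit allocator. This is only a sketch under simplifying assumptions: the `Node` class and `allocate` function are hypothetical, and real YARN schedulers apply queues, priorities, and fairness policies that are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A worker node with its currently unallocated resources (hypothetical model)."""
    name: str
    free_memory_mb: int
    free_vcores: int


def allocate(nodes, memory_mb, vcores):
    """Place a container on the first node with enough memory and CPU, or return None."""
    for node in nodes:
        if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
            node.free_memory_mb -= memory_mb
            node.free_vcores -= vcores
            return node.name
    return None  # the request has to wait until resources are released


cluster = [Node("node-1", 4096, 2), Node("node-2", 8192, 4)]
placements = [allocate(cluster, 3072, 2) for _ in range(4)]
print(placements)
# ['node-1', 'node-2', 'node-2', None]
```

The fourth request is not placed because no node has 3,072 MB and 2 cores left, which is the kind of situation a scheduler resolves by queuing the request.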

d. Hadoop Common:

This is a collection of shared libraries and utilities required by other Hadoop modules.

Key Features of Hadoop

Hadoop’s design makes it a preferred choice for big data processing:

- Distributed Storage: Data is stored across multiple nodes, ensuring scalability and fault tolerance.

- Fault-Tolerance: With data replication and automatic failure recovery, Hadoop ensures uninterrupted operations.

- Scalability: It can scale from a single server to thousands of machines.

- Data Locality: Processing occurs close to where the data is stored, improving efficiency.

- High Availability: Redundancy keeps data accessible even when individual nodes fail.

- Flexibility: Hadoop supports structured, semi-structured, and unstructured data.

Hadoop Ecosystem Modules

Hadoop’s ecosystem includes:

- MapReduce: A programming model for parallel data processing.

- HDFS: Manages distributed file storage.

- YARN: Allocates and manages cluster resources.

- Common: Libraries and utilities supporting other Hadoop modules.

Other popular frameworks in the ecosystem are:

- Hive: SQL-like querying for data in HDFS.

- Spark: Fast data processing with built-in machine learning capabilities.

- Pig: Simplified data transformation using Pig Latin.

- Tez: Optimized execution of Hive and Pig workflows.

Advantages of Hadoop

- Scalability: Easily scales with data growth by adding nodes.

- Cost-Effectiveness: Runs on commodity hardware, reducing costs.

- Fault-Tolerance: Handles hardware failures without data loss.

- Flexibility: Processes all types of data formats.

- Community Support: A vibrant open-source community ensures continuous development.

Challenges and Disadvantages

Despite its strengths, Hadoop has some limitations:

- Inefficient for Small Data: It’s optimized for large-scale processing, making it less suitable for small datasets.

- Complex Setup: Requires expertise for installation and maintenance.

- Latency Issues: Not ideal for real-time processing.

- Security Concerns: Security features such as Kerberos authentication and data encryption are not enabled by default and must be configured explicitly.

- High Resource Usage: Demands significant computing power and storage.

- Limited Ad-hoc Querying: The batch-oriented MapReduce model is less flexible for exploratory data analysis.

Real-World Use Cases of Hadoop

a. E-commerce and Retail

- Recommendation systems (e.g., Amazon, Flipkart).

- Customer sentiment analysis from social media and reviews.

b. Healthcare

- Genomic analysis and personalized treatment plans.

- Real-time monitoring of patients through IoT devices.

c. Finance

- Fraud detection using pattern recognition.

- Risk analysis for investments.

d. Telecommunications

- Call Data Record (CDR) analysis for network optimization.

- Predictive maintenance for equipment.

e. Media and Entertainment

- Analyzing user preferences to recommend movies and shows.

- Digital marketing campaign optimization.

Limitations of Hadoop

- Latency Issues: Not ideal for low-latency real-time processing tasks.

- Complexity: Requires expertise in Java, Linux, and cluster management.

- Small File Limitation: Performance degrades when handling a large number of small files.

- High Initial Setup Cost: Although it reduces costs in the long run, setting up a Hadoop cluster can be expensive initially.
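The small file limitation listed above is easier to see with numbers. The sketch below compares how many blocks the same amount of data produces when stored as one large file versus many small ones. It assumes a 128 MB block size and that every file occupies at least one block; the helper name `block_count` is made up for illustration.

```python
import math

BLOCK_SIZE_MB = 128  # assumed block size


def block_count(file_sizes_mb, block_size_mb=BLOCK_SIZE_MB):
    """Total blocks needed; every file occupies at least one block."""
    return sum(max(1, math.ceil(size / block_size_mb)) for size in file_sizes_mb)


one_large_file = [10_240]        # a single 10 GB file
many_small_files = [1] * 10_240  # the same 10 GB stored as 1 MB files

print(block_count(one_large_file))    # 80
print(block_count(many_small_files))  # 10240
```

The data volume is identical, but the small-file layout yields 128 times as many blocks. Each block has to be tracked separately, and by default each one typically becomes its own unit of work in a MapReduce job, which is where the overhead comes from.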

Future of Hadoop

While new technologies like Apache Spark and Kubernetes are gaining traction, Hadoop remains integral for handling large-scale batch processing tasks. Its ecosystem continues to evolve, integrating with modern tools to stay relevant in big data analytics.

Hadoop revolutionized big data processing by enabling cost-effective, scalable, and reliable data management. While it may not be the best choice for small datasets or real-time applications, its robust ecosystem and distributed computing capabilities make it indispensable for large-scale data analytics. As technology evolves, Hadoop continues to adapt, solidifying its place as a cornerstone of the big data revolution.

FAQs

1. What is Hadoop used for?

Hadoop is used for storing and processing massive amounts of structured, semi-structured, and unstructured data in a distributed computing environment. It is commonly applied in fields like data analytics, machine learning, business intelligence, and big data processing.

2. How does Hadoop ensure fault tolerance?

Hadoop ensures fault tolerance through data replication. The Hadoop Distributed File System (HDFS) replicates each data block across multiple nodes. If one node fails, the system retrieves the data from other replicated nodes, ensuring uninterrupted operations.
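The failover behavior described in this answer can be sketched as follows. This is a simplified model rather than the actual HDFS client logic: `pick_replica`, the block ID, and the node names are all hypothetical.

```python
def pick_replica(block_id, block_locations, live_nodes):
    """Return the first live node that holds a replica of the block."""
    for node in block_locations[block_id]:
        if node in live_nodes:
            return node
    raise IOError(f"no live replica for {block_id}")


# Hypothetical block map: one block replicated on three nodes.
block_locations = {"blk_001": ["node-a", "node-b", "node-c"]}

print(pick_replica("blk_001", block_locations, {"node-a", "node-b", "node-c"}))  # node-a
print(pick_replica("blk_001", block_locations, {"node-b", "node-c"}))            # node-b
```

When `node-a` is down, the read falls through to `node-b`. Data becomes unavailable only if every node holding a replica fails at the same time.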

3. Can Hadoop handle real-time data processing?

While Hadoop excels in batch processing, it is not optimized for real-time data processing. However, tools like Apache Spark and Apache Storm, which are part of the Hadoop ecosystem, can handle real-time data streaming effectively.

4. Is Hadoop suitable for small datasets?

Hadoop is not ideal for small datasets due to its high setup and resource overhead. It is best suited for large-scale data processing tasks where its distributed computing capabilities can be fully utilized.

5. What programming languages are supported by Hadoop?

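Hadoop is primarily written in Java and exposes Java APIs, which JVM languages such as Scala can also use. Other languages such as Python, C++, and Ruby are supported through Hadoop Streaming, which runs any executable that reads standard input and writes standard output as a mapper or reducer. Tools in the Hadoop ecosystem, such as Hive and Pig, provide SQL-like and high-level scripting capabilities for ease of use.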
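As an illustration of using a language other than Java, here is a minimal sketch of a word count mapper in the style Hadoop Streaming expects: it takes lines of text and emits tab-separated key and value records. The function name `mapper` is arbitrary, and the sample input is made up. In an actual Streaming job the script would read its lines from standard input and print each record.

```python
def mapper(lines):
    """Yield 'word<TAB>1' records, the key/value text format used by Streaming."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


# In a real Streaming job the script would loop over sys.stdin instead;
# a small in-memory sample is used here to keep the sketch self-contained.
for record in mapper(["big data", "big cluster"]):
    print(record)
```

A matching reducer would read the sorted records, split each on the tab character, and add up the counts for each word.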